This content refers to the previous stable release of PyMVPA.

Please visit www.pymvpa.org for the most recent version of PyMVPA and its documentation.

clfs.sg.svm

Module: clfs.sg.svm

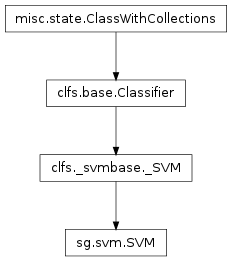

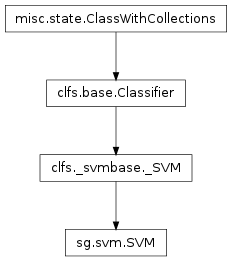

Inheritance diagram for mvpa.clfs.sg.svm:

Wrap Shogun's SVM implementations (LIBSVM by default) into a very simple class interface.


class mvpa.clfs.sg.svm.SVM(kernel_type='linear', **kwargs)

Bases: mvpa.clfs._svmbase._SVM

Support Vector Machine classifier(s) based on Shogun.

This is a simple base interface.

Note

Available state variables:

- feature_ids: Feature IDs that were used for the actual training.

- predicting_time+: Time (in seconds) the classifier took to predict.

- predictions+: Most recent set of predictions.

- trained_dataset: The dataset it has been trained on.

- trained_labels+: Set of unique labels it has been trained on.

- trained_nsamples+: Number of samples it has been trained on.

- training_confusion: Confusion matrix of learning performance.

- training_time+: Time (in seconds) the classifier took to train.

- values+: Internal classifier values on which the most recent predictions are based.

(States enabled by default are marked with +.)
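The `+` markers distinguish states that are enabled by default from optional ones, and the enable_states and disable_states constructor arguments adjust that set. The pure-Python sketch below models this bookkeeping; `resolve_states` and the two name sets are hypothetical illustrations rather than PyMVPA API, and the order (enable first, then disable) is an assumption of the sketch.

```python
# Hypothetical model of which state variables end up enabled.
# Assumption: enable_states is applied first, then disable_states.
DEFAULT_STATES = {
    "predicting_time", "predictions", "trained_labels",
    "trained_nsamples", "training_time", "values",
}
OPTIONAL_STATES = {"feature_ids", "trained_dataset", "training_confusion"}
ALL_STATES = DEFAULT_STATES | OPTIONAL_STATES


def resolve_states(enable_states=None, disable_states=None):
    """Return the set of enabled state names (hypothetical helper)."""
    enable = set(enable_states or [])
    disable = set(disable_states or [])
    unknown = (enable | disable) - ALL_STATES
    if unknown:
        raise ValueError("Unknown state variables: %s" % sorted(unknown))
    # Start from the defaults, add requested states, then drop disabled ones.
    return (DEFAULT_STATES | enable) - disable


enabled = resolve_states(enable_states=["training_confusion"],
                         disable_states=["values"])
print(sorted(enabled))
```

Here training_confusion is switched on in addition to the defaults, while values is switched off.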

See also

Please refer to the documentation of the base class for more information:

_SVM

Interface class to Shogun’s classifiers and regressors.

Default implementation is ‘libsvm’.

The SVM/SVR definition depends on the choice of kernel and implementation type, and on the parameters of each, which vary with the choices made.

The desired implementation is specified in the svm_impl argument. Below is the list of implementations known to this class, along with their specific parameters (described below with the rest of the parameters) and the tasks each can handle (regression, binary and/or multiclass classification).

Implementations:

| Implementation | Description | Parameters | Capabilities |
|---|---|---|---|
| libsvr | LIBSVM’s epsilon-SVR | C, tube_epsilon | regression |
| gnpp | Generalized Nearest Point Problem SVM | C | binary |
| libsvm | LIBSVM’s C-SVM (L2 soft-margin SVM) | C | binary, multiclass |
| gmnp | Generalized Nearest Point Problem SVM | C | binary, multiclass |
| gpbt | Gradient Projection Decomposition Technique for large-scale SVM problems | C | binary |
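Choosing svm_impl amounts to matching the task to the capabilities of the implementations listed above. As a hedged illustration, the sketch below encodes those capabilities as a plain dictionary and filters it. `IMPLEMENTATIONS`, `implementations_for` and `task_for` are hypothetical names, not PyMVPA internals, and treating any problem with at most two distinct labels as binary is an assumption of the sketch.

```python
# Hypothetical mirror of the implementation list; not PyMVPA's data structure.
IMPLEMENTATIONS = {
    "libsvr": {"params": ("C", "tube_epsilon"), "tasks": {"regression"}},
    "gnpp": {"params": ("C",), "tasks": {"binary"}},
    "libsvm": {"params": ("C",), "tasks": {"binary", "multiclass"}},
    "gmnp": {"params": ("C",), "tasks": {"binary", "multiclass"}},
    "gpbt": {"params": ("C",), "tasks": {"binary"}},
}


def implementations_for(task):
    """Return the sorted names of implementations that can handle ``task``."""
    return sorted(name for name, spec in IMPLEMENTATIONS.items()
                  if task in spec["tasks"])


def task_for(labels, regression=False):
    """Label a problem as 'regression', 'binary' or 'multiclass'."""
    if regression:
        return "regression"
    # Assumption: two or fewer distinct labels means a binary problem.
    return "binary" if len(set(labels)) <= 2 else "multiclass"


print(implementations_for(task_for([0, 1, 2, 1])))
```

With three distinct labels only the multiclass-capable implementations (gmnp and libsvm) remain.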

The kernel is chosen with the string argument kernel_type and can be specialized with additional arguments to this constructor. Some kernels allow computation of per-feature sensitivity.
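For a linear kernel, one common way to obtain per-feature sensitivity is to read off the primal weight vector of the trained binary SVM, w = Σ α_i y_i x_i, summed over the support vectors. The sketch below computes that vector from made-up dual coefficients that satisfy Σ α_i y_i = 0. It only illustrates the idea; it is not PyMVPA's sensitivity analyzer, whose exact computation this page does not describe, and `linear_svm_weights` is a hypothetical helper.

```python
# Sketch: per-feature weights of a trained *linear* binary SVM.
# Assumption: dual coefficients alpha_i, labels y_i in {-1, +1} and the
# support vectors x_i are already known (the numbers below are made up).
def linear_svm_weights(alphas, labels, support_vectors):
    """Return w = sum_i alpha_i * y_i * x_i, one weight per feature."""
    n_features = len(support_vectors[0])
    w = [0.0] * n_features
    for alpha, y, x in zip(alphas, labels, support_vectors):
        for j in range(n_features):
            w[j] += alpha * y * x[j]
    return w


alphas = [1.0, 0.25, 0.75]          # sum(alpha_i * y_i) == 0
labels = [1, -1, -1]
support_vectors = [[2.0, 0.0, 1.0],
                   [0.0, 2.0, 1.0],
                   [1.0, 1.0, 0.0]]
w = linear_svm_weights(alphas, labels, support_vectors)
print(w)  # [1.25, -1.25, 0.75]
```

Features with larger |w_j| influence the decision value w·x + b more strongly, which is why such weights are often used as sensitivities.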

Kernels:

| Kernel | Parameters | Notes |
|---|---|---|
| rbf | gamma | |
| rbfshift | gamma, max_shift, shift_step | |
| linear | No parameters | provides sensitivity |
| sigmoid | cache_size, coef0, gamma | |
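To make the kernel list more concrete, the sketch below evaluates the linear, RBF and sigmoid kernels on two small vectors and checks kernel_type against a dictionary of allowed parameters. `KERNEL_PARAMS` is a hypothetical stand-in for cls._KERNELS, not its real contents. The RBF form assumes Shogun's Gaussian-kernel convention, in which gamma acts as a width: k(x, y) = exp(-||x - y||² / gamma). Rejecting parameters that a kernel does not list is a design choice of this sketch only. Note that cache_size, although listed for sigmoid, controls kernel caching rather than the kernel value.

```python
import math

# Hypothetical mirror of the kernel list (not PyMVPA's _KERNELS).
KERNEL_PARAMS = {
    "linear": (),
    "rbf": ("gamma",),
    "rbfshift": ("gamma", "max_shift", "shift_step"),
    "sigmoid": ("cache_size", "coef0", "gamma"),
}


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x, y):
    return dot(x, y)


def rbf_kernel(x, y, gamma):
    # Assumption: gamma is the Gaussian width, k = exp(-||x - y||^2 / gamma).
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / gamma)


def sigmoid_kernel(x, y, gamma, coef0):
    # Standard sigmoid kernel: tanh(gamma * <x, y> + coef0).
    return math.tanh(gamma * dot(x, y) + coef0)


def check_kernel_args(kernel_type, **kwargs):
    """Reject unknown kernel names and parameters the kernel does not list."""
    if kernel_type not in KERNEL_PARAMS:
        raise ValueError("Unknown kernel_type %r" % kernel_type)
    extra = set(kwargs) - set(KERNEL_PARAMS[kernel_type])
    if extra:
        raise ValueError("%s does not use %s" % (kernel_type, sorted(extra)))


x, y = [1.0, 2.0], [2.0, 0.0]
check_kernel_args("rbf", gamma=2.0)
print(linear_kernel(x, y), rbf_kernel(x, y, gamma=2.0),
      sigmoid_kernel(x, y, gamma=0.5, coef0=0.5))
```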

Parameters:

- tube_epsilon – Epsilon in the epsilon-insensitive loss function of epsilon-SVM regression (SVR). (Default: 0.01)

- C – Trade-off parameter between the width of the margin and the number of support vectors. A higher C gives a more rigid margin. With the linear kernel, a negative value is automatically scaled according to the norm of the data. (Default: -1.0)

- shift_step – Shift step for Shogun’s GaussianShiftKernel. (Default: 1)

- max_shift – Maximal shift for Shogun’s GaussianShiftKernel. (Default: 10)

- epsilon – Tolerance of the termination criterion. (For nu-SVM the default is 0.001.) (Default: 5e-05)

- cache_size – Size of the kernel cache, in megabytes. (Default: 100)

- coef0 – Offset coefficient in polynomial and sigmoid kernels. (Default: 0.5)

- gamma – Scaling (width in RBF) within non-linear kernels. (Default: 0)

- num_threads – Number of threads to use. (Default: 1)

- retrainable – Whether to enable retraining for a ‘retrainable’ classifier. (Default: False)

- enable_states (None or list of basestring) – Names of the state variables which should be enabled in addition to the default ones.

- disable_states (None or list of basestring) – Names of the state variables which should be disabled.

- kernel_type (basestring) – String must be a valid key for cls._KERNELS.
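Two of these parameters have a direct numeric reading. A negative C on the linear kernel is scaled according to the norm of the data. The exact formula is not given on this page, so the sketch assumes the heuristic C = |C| / (mean sample norm)², chosen for illustration. tube_epsilon is the width of the epsilon-insensitive loss used by epsilon-SVR, max(0, |y - f(x)| - ε), under which errors smaller than ε cost nothing. `default_c`, `effective_c` and `eps_insensitive_loss` are hypothetical helpers, not PyMVPA functions.

```python
import math


def default_c(samples):
    """Assumed heuristic: 1 / (mean Euclidean norm of the samples) ** 2."""
    norms = [math.sqrt(sum(v * v for v in row)) for row in samples]
    mean_norm = sum(norms) / len(norms)
    # Assumption: fall back to 1.0 when all samples are zero vectors.
    return 1.0 if mean_norm == 0 else 1.0 / mean_norm ** 2


def effective_c(C, samples):
    """A negative C scales the data-derived default by |C|; otherwise C is used as-is."""
    return default_c(samples) * abs(C) if C < 0 else C


def eps_insensitive_loss(y_true, y_pred, tube_epsilon=0.01):
    """Mean epsilon-insensitive loss: errors inside the tube cost nothing."""
    losses = [max(0.0, abs(t - p) - tube_epsilon)
              for t, p in zip(y_true, y_pred)]
    return sum(losses) / len(losses)


samples = [[3.0, 4.0], [0.0, 5.0]]   # both rows have norm 5
print(effective_c(-1.0, samples))    # 0.04, i.e. 1 / 25
print(effective_c(10.0, samples))    # 10.0, positive C is used unchanged
print(eps_insensitive_loss([1.0, 2.0], [1.005, 2.5]))
```

The first regression error (0.005) lies inside the 0.01 tube and contributes nothing; only the second error counts, reduced by the tube width.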


svm

Access to the SVM model.


traindataset

Dataset which was used for training

TODO – this might be better as a state variable.


untrain()