|

Multivariate Pattern Analysis in Python |

|

Multivariate Pattern Analysis in Python |

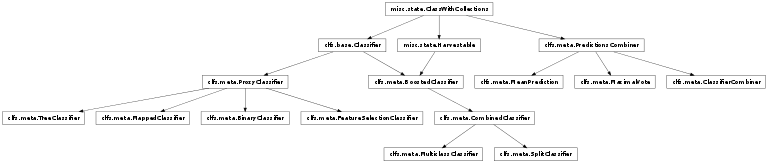

Inheritance diagram for mvpa.clfs.meta:

Classes for meta classifiers – classifiers which use other classifiers

Meta Classifiers can be grouped according to their function as

| group BoostedClassifiers: | |

|---|---|

| CombinedClassifier MulticlassClassifier SplitClassifier | |

| group ProxyClassifiers: | |

| ProxyClassifier BinaryClassifier MappedClassifier FeatureSelectionClassifier | |

| group PredictionsCombiners for CombinedClassifier: | |

| PredictionsCombiner MaximalVote MeanPrediction | |

Bases: mvpa.clfs.meta.ProxyClassifier

ProxyClassifier which maps set of two labels into +1 and -1

Note

Available state variables:

(States enabled by default are listed with +)

| Parameters: |

|

|---|

Bases: mvpa.clfs.base.Classifier, mvpa.misc.state.Harvestable

Classifier containing the farm of other classifiers.

Should rarely be used directly. Use one of its childs instead

Note

Available state variables:

(States enabled by default are listed with +)

See also

Please refer to the documentation of the base classes for more information:

Initialize the instance.

| Parameters: |

|

|---|

Used classifiers

Return an appropriate SensitivityAnalyzer

Untrain BoostedClassifier

Has to untrain any known classifier

Bases: mvpa.clfs.meta.PredictionsCombiner

Provides a decision using training a classifier on predictions/values

TODO: implement

Note

Available state variables:

(States enabled by default are listed with +)

See also

Please refer to the documentation of the base class for more information:

Initialize ClassifierCombiner

| Parameters: |

|

|---|

It might be needed to untrain used classifier

Bases: mvpa.clfs.meta.BoostedClassifier

BoostedClassifier which combines predictions using some PredictionsCombiner functor.

Note

Available state variables:

(States enabled by default are listed with +)

See also

Please refer to the documentation of the base class for more information:

Initialize the instance.

| Parameters: |

|

|---|

Used combiner to derive a single result

Provide summary for the CombinedClassifier.

Untrain CombinedClassifier

Bases: mvpa.clfs.meta.ProxyClassifier

ProxyClassifier which uses some FeatureSelection prior training.

FeatureSelection is used first to select features for the classifier to use for prediction. Internally it would rely on MappedClassifier which would use created MaskMapper.

TODO: think about removing overhead of retraining the same classifier if feature selection was carried out with the same classifier already. It has been addressed by adding .trained property to classifier, but now we should expclitely use isTrained here if we want... need to think more

Note

Available state variables:

(States enabled by default are listed with +)

Initialize the instance

| Parameters: |

|

|---|

Used FeatureSelection

Used MappedClassifier

Set testing dataset to be used for feature selection

Untrain FeatureSelectionClassifier

Has to untrain any known classifier

Bases: mvpa.clfs.meta.ProxyClassifier

ProxyClassifier which uses some mapper prior training/testing.

MaskMapper can be used just a subset of features to train/classify. Having such classifier we can easily create a set of classifiers for BoostedClassifier, where each classifier operates on some set of features, e.g. set of best spheres from SearchLight, set of ROIs selected elsewhere. It would be different from simply applying whole mask over the dataset, since here initial decision is made by each classifier and then later on they vote for the final decision across the set of classifiers.

Note

Available state variables:

(States enabled by default are listed with +)

Initialize the instance

| Parameters: |

|

|---|

Used mapper

Bases: mvpa.clfs.meta.PredictionsCombiner

Provides a decision using maximal vote rule

Note

Available state variables:

(States enabled by default are listed with +)

See also

Please refer to the documentation of the base class for more information:

XXX Might get a parameter to use raw decision values if voting is not unambigous (ie two classes have equal number of votes

| Parameters: |

|

|---|

Bases: mvpa.clfs.meta.PredictionsCombiner

Provides a decision by taking mean of the results

Note

Available state variables:

(States enabled by default are listed with +)

See also

Please refer to the documentation of the base class for more information:

Bases: mvpa.clfs.meta.CombinedClassifier

CombinedClassifier to perform multiclass using a list of BinaryClassifier.

such as 1-vs-1 (ie in pairs like libsvm doesn) or 1-vs-all (which is yet to think about)

Note

Available state variables:

(States enabled by default are listed with +)

See also

Please refer to the documentation of the base class for more information:

Initialize the instance

| Parameters: |

|

|---|

Bases: mvpa.misc.state.ClassWithCollections

Base class for combining decisions of multiple classifiers

PredictionsCombiner might need to be trained

| Parameters: |

|

|---|

Bases: mvpa.clfs.base.Classifier

Classifier which decorates another classifier

Possible uses:

- modify data somehow prior training/testing: * normalization * feature selection * modification

- optimized classifier?

Note

Available state variables:

(States enabled by default are listed with +)

Initialize the instance

| Parameters: |

|

|---|

Used Classifier

Untrain ProxyClassifier

Bases: mvpa.clfs.meta.CombinedClassifier

BoostedClassifier to work on splits of the data

Note

Available state variables:

(States enabled by default are listed with +)

See also

Please refer to the documentation of the base class for more information:

Initialize the instance

| Parameters: |

|

|---|

Splitter user by SplitClassifier

Bases: mvpa.clfs.meta.ProxyClassifier

TreeClassifier which allows to create hierarchy of classifiers

Functions by grouping some labels into a single “meta-label” and training classifier first to separate between meta-labels. Then each group further proceeds with classification within each group.

Possible scenarios:

TreeClassifier(SVM(),

{'animate': ((1,2,3,4),

TreeClassifier(SVM(),

{'human': (('male', 'female'), SVM()),

'animals': (('monkey', 'dog'), SMLR())})),

'inanimate': ((5,6,7,8), SMLR())})

would create classifier which would first do binary classification to separate animate from inanimate, then for animate result it would separate to classify human vs animal and so on:

SVM

/ animate inanimate

/ SVM SMLR

/ \ / | \ human animal 5 6 7 8

| |

SVM SVM

/ \ / male female monkey dog

1 2 3 4

If it is desired to have a trailing node with a single label and thus without any classification, such as in

SVM/ g1 g2

- / 1 SVM

- / 2 3

then just specify None as the classifier to use:

TreeClassifier(SVM(),

{'g1': ((1,), None),

'g2': ((1,2,3,4), SVM())})

Note

Available state variables:

(States enabled by default are listed with +)

Initialize TreeClassifier

| Parameters: |

|

|---|

Dictionary of classifiers used by the groups

Provide summary for the TreeClassifier.

Untrain TreeClassifier